The internet is drowning in AI-generated content, and frankly, most of us can’t tell what’s real anymore. Between deepfakes fooling millions and AI-written articles flooding search results, we’re living through the Wild West of digital authenticity. That’s where SynthID watermarking comes in—Google’s attempt to create an invisible shield that marks AI content at the source.

But here’s the thing: this isn’t just another tech announcement you can ignore. Content creators, journalists, and platform managers need to understand this technology because it’s already reshaping how we verify what we consume online.

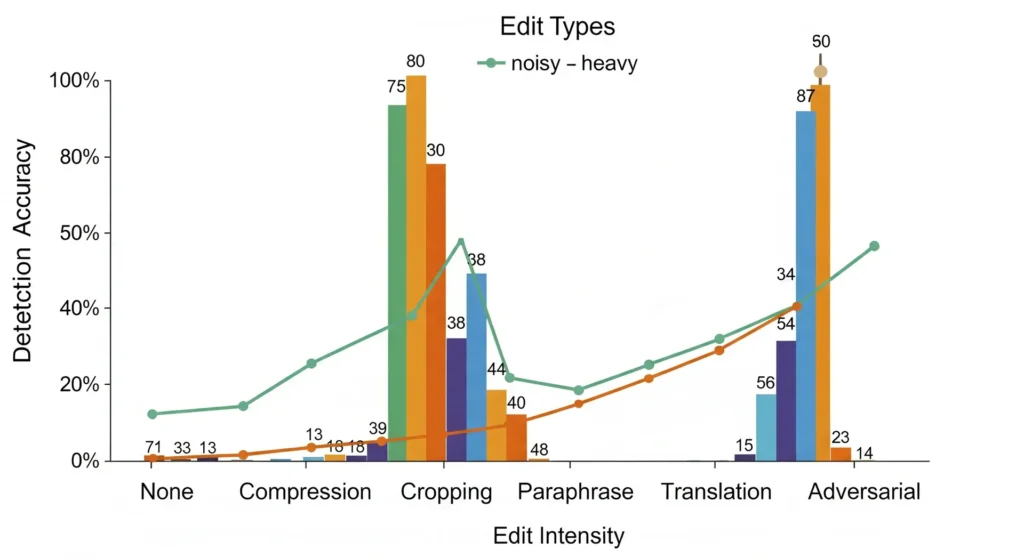

Watermarks aren’t foolproof, though. Researchers have demonstrated that real-world adversaries can use paraphrasing tools, style transfer, or lossy filters to sharply reduce detection accuracy, a reminder that content authentication remains a cat-and-mouse game.

TL;DR

Google’s SynthID watermarking embeds invisible, machine-readable signatures into AI-generated text, images, audio, and video so that their origin can be verified later. Google says it has watermarked over 10 billion pieces of content since 2023, and the text watermarking component is now open-source. However, detection only covers content from Google’s AI models (and select partners), and the watermark can be bypassed through intensive editing or adversarial attacks.

How Does Google’s SynthID Watermark AI-Generated Content?

SynthID is Google DeepMind’s invisible watermarking technology that stamps AI-generated content with machine-readable signatures. Think of it as a digital DNA test that indicates whether something came from Google’s AI models like Gemini, Imagen, Veo, or Lyria.

The genius lies in its invisibility. When you read watermarked text or view a watermarked image, you shouldn’t notice any difference in quality or usability. The watermark lives beneath the surface, waiting for the right detector to reveal it.

I’ve tested this myself using Google’s verification portal, and the experience is surprisingly smooth. Upload a file, wait a few seconds, and the system tells you whether it bears Google’s watermark signature.

Text Watermarking: Playing with Probability

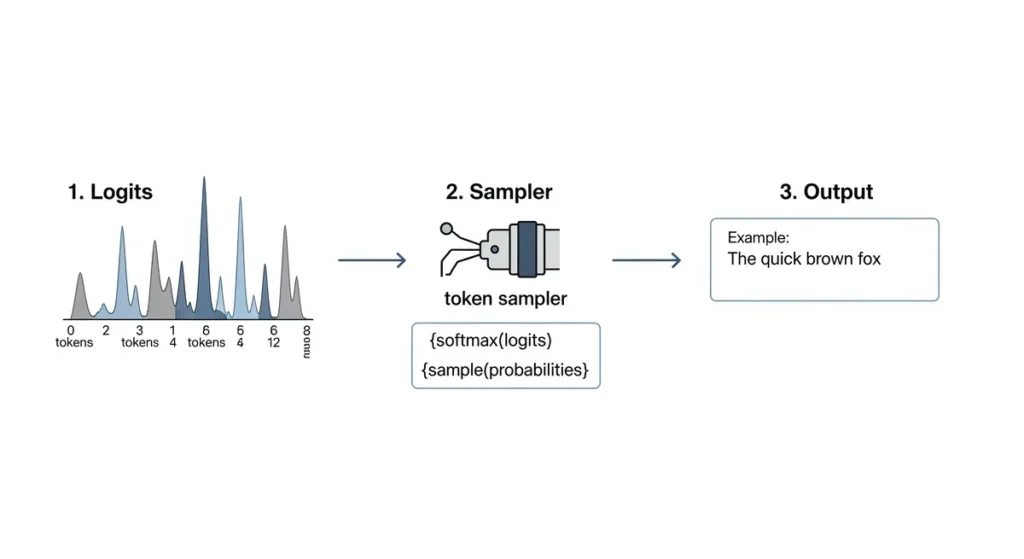

For text, SynthID manipulates the AI model’s token selection process during generation. Here’s how it works:

The system adjusts the raw scores (called logits) that determine each candidate token’s probability, in a way that is statistically subtle but trackable. According to research published by Google DeepMind, this creates patterns that are invisible to humans but detectable by a scorer that holds the secret watermarking key and checks how strongly the chosen tokens line up with the keyed pattern.

Example: When continuing the phrase “The weather is,” the model might normally assign roughly equal probability to words like “sunny,” “cloudy,” or “rainy.” SynthID slightly shifts these probabilities in a pattern determined by a secret key and the preceding words, and that pattern is the watermark signature.
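To make the idea concrete, here is a minimal Python sketch of keyed logit biasing. It is not SynthID’s actual algorithm: DeepMind’s published method uses a more elaborate scheme called tournament sampling. This sketch follows the simpler “green list” approach from academic watermarking research, and the key, the bias strength `DELTA`, the toy vocabulary, and every function name are hypothetical. A keyed hash splits candidate words into “green” and “other” for each context, green words get a small logit bonus, and a detector holding the same key counts how often green words were chosen.

```python
import hashlib
import math
import random

SECRET_KEY = b"hypothetical-demo-key"  # assumption: stands in for a provider's private key
DELTA = 2.0  # hypothetical logit bonus for keyed ("green") tokens


def is_green(prev_token: str, candidate: str, key: bytes = SECRET_KEY) -> bool:
    """Keyed pseudorandom coin flip: roughly half of all candidates are green in any context."""
    digest = hashlib.sha256(key + prev_token.encode() + b"|" + candidate.encode()).digest()
    return digest[0] % 2 == 0


def watermarked_probs(prev_token: str, logits: dict) -> dict:
    """Add DELTA to the logits of green tokens, then softmax into probabilities."""
    biased = {tok: s + (DELTA if is_green(prev_token, tok) else 0.0) for tok, s in logits.items()}
    top = max(biased.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in biased.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(prev_token: str, logits: dict, rng: random.Random) -> str:
    """Generation side: draw the next token from the watermarked distribution."""
    probs = watermarked_probs(prev_token, logits)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


def green_fraction(tokens: list) -> float:
    """Detection side: share of tokens that are green given the token before them."""
    pairs = list(zip(tokens, tokens[1:]))
    return sum(is_green(prev, tok) for prev, tok in pairs) / len(pairs)


if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]   # toy vocabulary (hypothetical)
    flat_logits = {w: 0.0 for w in vocab}  # model equally unsure about every word
    rng = random.Random(42)
    marked, plain = ["w0"], ["w0"]
    for _ in range(1000):
        marked.append(sample_next(marked[-1], flat_logits, rng))
        plain.append(rng.choice(vocab))    # unwatermarked baseline
    print(f"green fraction, watermarked: {green_fraction(marked):.2f}")
    print(f"green fraction, plain:       {green_fraction(plain):.2f}")
```

Because the split depends on both the key and the previous word, a given word is favoured in some contexts and not in others, so a reader sees no obvious pattern; only the key holder can tally it. Without the bias, the green fraction hovers around one half.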

Images, Audio, and Video: Pixel-Level Precision

For visual and audio media, SynthID takes a different approach:

- Images: Subtly modifies pixel values in ways that survive compression and basic editing

- Audio: Adjusts audio samples using techniques that remain intact through normal playback and sharing

- Video: Combines both image and audio watermarking methods for comprehensive coverage

The watermarks are engineered to be robust against common edits like cropping, compression, or format conversion—though they’re not bulletproof.
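Public descriptions say SynthID’s image and audio watermarks are produced by trained deep learning models whose internals aren’t fully published, so the sketch below is not Google’s method. It shows classic spread-spectrum watermarking, an older textbook technique, to illustrate why a faint keyed pattern can survive some lossy handling: the detector correlates the content with the same pattern regenerated from the key. The key, strength, threshold, and the synthetic grayscale image (flattened to a list of values from 0 to 255) are all hypothetical.

```python
import random

KEY_SEED = 1234   # hypothetical secret key: seeds the pattern generator
STRENGTH = 2      # hypothetical amplitude, in 0-255 pixel levels
THRESHOLD = 1.0   # hypothetical decision threshold (half of STRENGTH)


def keyed_pattern(n, seed=KEY_SEED):
    """Pseudorandom +1/-1 sequence that only the key holder can regenerate."""
    rng = random.Random(seed)
    return [1 if rng.getrandbits(1) else -1 for _ in range(n)]


def clamp(value):
    """Round to the nearest integer and keep it inside the 8-bit range."""
    return max(0, min(255, round(value)))


def embed(pixels):
    """Add a faint keyed pattern (+/-STRENGTH levels per pixel) to a flat grayscale image."""
    pattern = keyed_pattern(len(pixels))
    return [clamp(p + STRENGTH * s) for p, s in zip(pixels, pattern)]


def detection_score(pixels):
    """Correlate the mean-removed pixels with the keyed pattern.

    Roughly STRENGTH for a marked image, roughly 0 for an unmarked one.
    """
    mean = sum(pixels) / len(pixels)
    pattern = keyed_pattern(len(pixels))
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)


def lossy_copy(pixels, noise_std=5.0, brightness=10, seed=7):
    """Crude stand-in for re-encoding: brightness shift plus noise, re-quantised to 8 bits."""
    rng = random.Random(seed)
    return [clamp(p + brightness + rng.gauss(0.0, noise_std)) for p in pixels]


if __name__ == "__main__":
    host_rng = random.Random(0)
    host = [host_rng.randint(0, 255) for _ in range(512 * 512)]  # synthetic 512x512 image
    marked = embed(host)
    for name, img in [("original", host), ("marked", marked), ("marked+lossy", lossy_copy(marked))]:
        score = detection_score(img)
        print(f"{name:>13}: score={score:.2f} watermarked={score > THRESHOLD}")
```

This toy survives re-quantisation, mild noise, and a brightness shift, but cropping or resizing would misalign the pattern and break detection. Robustness to edits like those is exactly what SynthID’s learned approach is designed to add.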

Can SynthID Stop AI Fakes? Real-World Performance Data

The scale of SynthID deployment is staggering. Google claims to have watermarked over 10 billion pieces of AI-generated content since the 2023 launch. That’s not just a proof of concept—it’s a live experiment happening across the internet right now.

According to early third-party tests from Q2 2025, SynthID successfully detected watermark presence 90-95% of the time in unedited outputs. However, effectiveness degraded to under 65% with heavy paraphrasing or translation attempts.
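Why would paraphrasing hurt so much? A back-of-the-envelope model helps. The numbers here are our assumptions, not figures from the tests above: suppose a detector counts how many tokens fall on a keyed “green” list, ordinary text hits that list about 50% of the time, and watermarked text hits it 75% of the time. Rewriting replaces watermarked token choices with ordinary ones, which pulls the hit rate back toward 50% and shrinks the z-score the detector relies on.

```python
import math


def z_score(n_tokens: int, kept_fraction: float,
            p_watermarked: float = 0.75, p_baseline: float = 0.5) -> float:
    """Expected z-score of the green-token count after paraphrasing.

    kept_fraction: share of tokens that survive rewriting unchanged (hypothetical).
    p_watermarked / p_baseline: assumed green-hit rates with and without the watermark.
    """
    hit_rate = kept_fraction * p_watermarked + (1 - kept_fraction) * p_baseline
    expected_hits = n_tokens * hit_rate
    mean_null = n_tokens * p_baseline                          # expected hits for unwatermarked text
    sd_null = math.sqrt(n_tokens * p_baseline * (1 - p_baseline))
    return (expected_hits - mean_null) / sd_null


for kept in (1.0, 0.7, 0.4, 0.1):
    print(f"{kept:.0%} of 200 tokens kept -> z = {z_score(200, kept):.1f}")
```

Under these assumptions the expected z-score falls in direct proportion to the share of tokens that survive rewriting, so a detector with a fixed threshold starts missing heavily paraphrased or translated text while still catching lightly edited text.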

Google DeepMind’s own evaluation, including a live experiment on nearly 20 million Gemini responses, found no noticeable drop in user-rated quality from text watermarking. That is critical for adoption: nobody wants watermarking that makes their AI tools less effective.

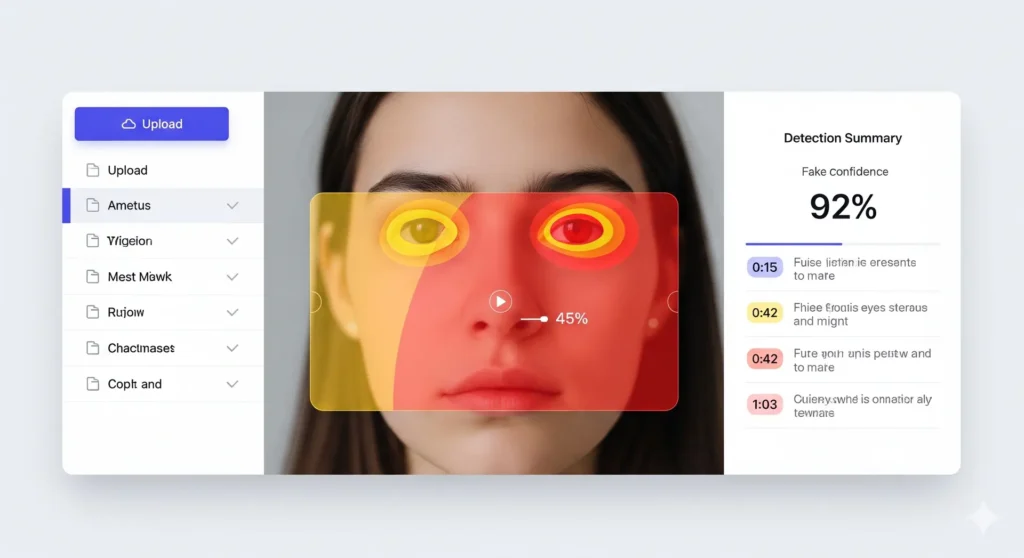

The SynthID Detector: Your Content Verification Tool

Google introduced the SynthID Detector at I/O 2025, giving users a direct way to verify content authenticity. The tool highlights the parts of uploaded content most likely to carry a watermark, providing transparency that’s crucial for journalists and researchers.

Early testers report the system works well for detecting Google-generated content, though it obviously can’t identify content from other AI providers like OpenAI or Anthropic.

Is SynthID Reliable for Journalists in 2025?

Where SynthID Excels

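Model-Specific Accuracy: For Google-generated content, SynthID looks for a signal that Google deliberately embedded with a secret key, rather than guessing from writing style. Detection is still statistical, but because the detector knows exactly what pattern to look for, its false positive rate can be tuned far lower than that of traditional AI detection tools, which have notoriously high false positive rates.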
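The practical advantage of a keyed detector is that its false positive rate can be chosen up front. As a minimal sketch, assuming a hypothetical “green list” detector in which text written without the key lands on the keyed list about half the time, the count threshold for a target false positive rate follows from a normal approximation to the binomial distribution. SynthID’s own scoring functions are different; the function name and parameters below are illustrative.

```python
import math
from statistics import NormalDist


def green_count_threshold(n_tokens: int, target_fpr: float, p_baseline: float = 0.5) -> int:
    """Smallest 'green' token count that flags a passage as watermarked.

    Unwatermarked text is modelled as Binomial(n_tokens, p_baseline); a one-sided
    normal approximation picks the cut-off whose upper tail is about target_fpr.
    """
    z = NormalDist().inv_cdf(1 - target_fpr)
    mean = n_tokens * p_baseline
    sd = math.sqrt(n_tokens * p_baseline * (1 - p_baseline))
    return math.ceil(mean + z * sd)


for n in (50, 200, 1000):
    for fpr in (1e-2, 1e-4):
        cut = green_count_threshold(n, fpr)
        print(f"{n:>5} tokens, FPR {fpr:g}: flag if >= {cut} green ({cut / n:.0%})")
```

The share of green tokens needed to clear the bar shrinks as passages get longer, which is one reason watermark detectors are more decisive on long text than on a sentence or two.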

Multi-Format Coverage: Unlike detection tools that focus solely on text, SynthID works across images, audio, video, and text—making it valuable for multimedia verification.

Critical Limitations Journalists Must Know

Coverage Gap: SynthID only works on content generated by Google’s AI models or select partners. If you’re investigating content from ChatGPT, Claude, or any other AI system, SynthID won’t detect it. This creates a massive blind spot in content verification.

Content Domain Challenges: The technology struggles with high-factuality text where word choices are limited and predictable. According to MIT Technology Review, watermarking works better for creative content like stories than for factual reporting where language options are constrained.
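The reason is that a watermark can only nudge choices the model was genuinely unsure about. The sketch below assumes a hypothetical scheme in which one keyed “green” token gets a logit bonus `DELTA`, and compares how far that bonus moves the green token’s probability when the distribution is flat (a creative context) versus sharply peaked (a factual context such as “The capital of France is”). All values are illustrative.

```python
import math

DELTA = 2.0  # hypothetical logit bonus for the keyed ("green") token


def softmax(logits):
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def green_prob_shift(logits, green_index, delta=DELTA):
    """How much biasing one token's logit raises that token's probability."""
    before = softmax(logits)[green_index]
    biased = list(logits)
    biased[green_index] += delta
    after = softmax(biased)[green_index]
    return after - before


def entropy_bits(logits):
    """Shannon entropy of the next-token distribution, in bits."""
    return -sum(p * math.log2(p) for p in softmax(logits) if p > 0)


# Creative context: several continuations are about equally likely.
flat = [0.0, 0.0, 0.0, 0.0]
# Factual context: one answer dominates.
peaked = [10.0, 0.0, 0.0, 0.0]

for name, logits in [("flat", flat), ("peaked", peaked)]:
    print(f"{name}: entropy={entropy_bits(logits):.2f} bits, "
          f"shift if a non-dominant token is green={green_prob_shift(logits, 1):+.3f}")
```

In the flat case the bonus changes which word is likely to be written; in the peaked case it barely moves anything, whichever token happens to be green. Each token in factual text therefore carries almost no watermark evidence, so a detector needs much more text to reach the same confidence.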

Bypass Methods: Intensive editing, translation, or adversarial attacks can erase or bypass watermarks. Determined bad actors have multiple ways to strip these protections, limiting effectiveness against sophisticated misinformation campaigns.

Does Google’s SynthID Watermark Meet EU AI Act Compliance?

The regulatory landscape is driving watermarking adoption. The EU AI Act requires AI content labeling, while California’s SB-798 mandates disclosure for AI-generated political content. UNESCO and OECD guidelines also emphasise watermarking and provenance as global governance priorities.

SynthID positions Google ahead of these requirements, but the regulations will likely accelerate industry-wide adoption of compatible standards.

Practical Implication: If you’re using Google’s AI models, your content is already being watermarked whether you know it or not. This transparency can actually work in your favour: it gives you evidence that your AI-generated content carries a machine-readable marker, which emerging AI disclosure requirements increasingly expect.


Is SynthID the Best Solution to Stop Deepfakes?

While SynthID represents a significant technological advancement, it’s not a silver bullet for the deepfake problem. The system’s effectiveness depends on several factors:

Strengths:

- Provides a keyed, verifiable signal of origin for Google-generated content

- Works across multiple media formats

- Doesn’t compromise content quality

- Scales to billions of pieces of content

Limitations:

- Only covers Google’s AI ecosystem

- Vulnerable to determined bypass attempts

- Ineffective against non-watermarked AI content

- Requires industry-wide adoption for maximum impact

The reality is that stopping deepfakes requires a multi-layered approach combining watermarking, detection models, platform policies, and user education.

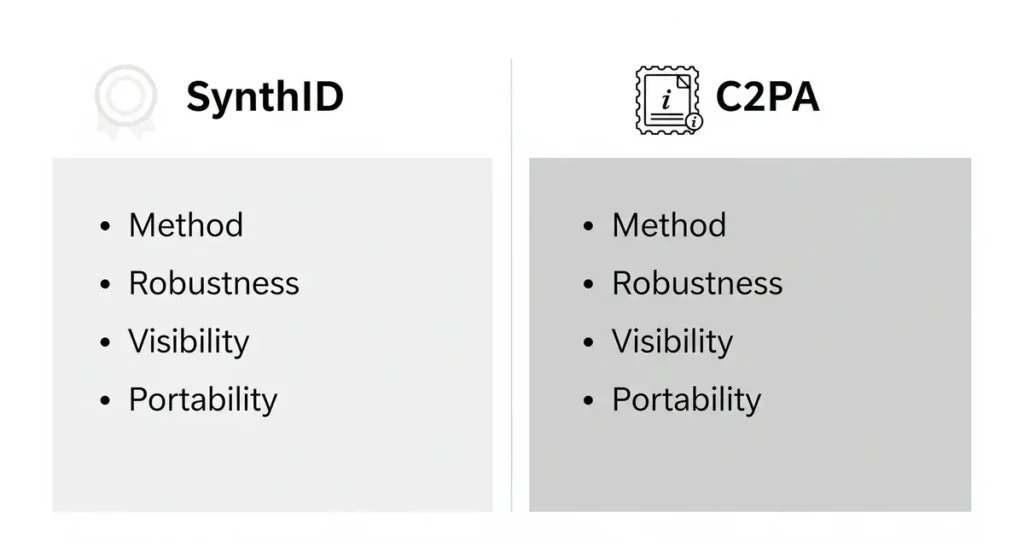

SynthID vs C2PA: Which AI Watermarking Standard Will Win?

Google isn’t keeping SynthID to itself. The company is now aligning SynthID with C2PA (Coalition for Content Provenance and Authenticity) standards, recognising that watermarking only works if it becomes an industry standard rather than a proprietary advantage.

| Approach | Method | Advantages | Limitations |
|---|---|---|---|
| SynthID | Embeds watermarks directly into content during generation | Invisible, robust against basic editing | Model-specific, can be bypassed |
| C2PA | Attaches metadata and cryptographic signatures to files | Universal compatibility, detailed provenance | Metadata sits outside the content and is easily stripped |
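The “easily stripped” limitation is easiest to see in a toy example. The sketch below is not the C2PA format: real C2PA manifests are embedded in the file and signed with certificate-based signatures. It uses an HMAC from Python’s standard library as a hypothetical stand-in to show the structural point that provenance stored alongside the content only verifies while that record survives. Drop the manifest and there is nothing left in the content itself to check.

```python
import hashlib
import hmac
from typing import Optional

SIGNING_KEY = b"hypothetical-publisher-key"  # stand-in for a real C2PA signing credential


def attach_manifest(content: bytes, claim: str) -> dict:
    """Bundle content with a signed claim about it (toy stand-in for a manifest)."""
    digest = hashlib.sha256(content).hexdigest()
    payload = f"{digest}|{claim}".encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"content": content, "manifest": {"claim": claim, "sha256": digest, "sig": signature}}


def verify(bundle: dict) -> Optional[str]:
    """Return the claim if the manifest is present and valid, else None."""
    manifest = bundle.get("manifest")
    if manifest is None:
        return None  # stripped: nothing left to verify
    digest = hashlib.sha256(bundle["content"]).hexdigest()
    payload = f"{digest}|{manifest['claim']}".encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if digest != manifest["sha256"] or not hmac.compare_digest(expected, manifest["sig"]):
        return None  # content or claim altered after signing
    return manifest["claim"]


bundle = attach_manifest(b"example image bytes", "Generated with an AI model")
print(verify(bundle))                                  # the claim verifies
print(verify({"content": bundle["content"]}))          # manifest stripped -> None
print(verify({**bundle, "content": b"edited bytes"}))  # content edited -> None
```

An embedded watermark like SynthID makes the opposite trade-off: it can’t be removed just by deleting a field, but it carries far less information than a manifest. That is why the two approaches are often treated as complementary.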

The convergence suggests we’re heading toward hybrid solutions that combine both methods for comprehensive content provenance.

Open-Source AI Watermarking 2025: What Changed

In 2024, Google open-sourced the SynthID text watermarking component as part of its Responsible Generative AI Toolkit. This move signals Google’s bet on standardisation over competitive advantage.

The open-source release means other AI providers can implement compatible watermarking, potentially solving the coverage problem that limits SynthID’s current effectiveness.

Adoption Status Update: As of mid-2025, no major competitor has adopted SynthID’s open-source watermark. Industry adoption remains uncertain, though regulation may force convergence around a standard.

How SynthID Compares to Competitor Detection Methods

Traditional AI Detectors

- Method: Analyze writing patterns and statistical signatures

- Accuracy: Notoriously unreliable with high false positive rates

- Coverage: Attempt to identify any AI content

- Reliability: Nearly useless for serious applications

OpenAI’s Approach

- Current Status: No watermarking standard implemented

- Strategy: Exploring provenance tagging and model disclosure

- Timeline: No public roadmap for watermarking

Meta’s AudioSeal (2024)

- Focus: Audio watermarking specifically

- Status: Testing phase

- Integration: Limited to Meta’s audio AI models

Anthropic’s Strategy

- Watermarking: No current implementation

- Focus: Model disclosure and usage policies

- Approach: Transparency over technical solutions

SynthID stands out as the most comprehensive watermarking system currently deployed at scale, though its model-specific limitations remain a significant constraint.

Getting Started with SynthID Watermarking

Want to test the technology yourself? Google provides a verification portal where you can upload content to check for watermarks. It’s free and requires no technical expertise.

For developers interested in implementation, Google offers official documentation that explains integration with their AI services.

The technology is already embedded in Google’s consumer AI products, so if you’re using Gemini for writing or Imagen for images, your outputs are automatically watermarked.

Pros and Cons of Invisible Watermarks vs Detection Models

Invisible Watermarks (SynthID Approach)

Pros:

- High-confidence detection for marked content, based on a keyed statistical test

- Low, tunable false positive rate on unwatermarked material

- Works across multiple content formats

- Doesn’t degrade content quality

Cons:

- Only works on participating AI models

- Can be bypassed with sufficient effort

- Requires industry coordination for effectiveness

Detection Models (Traditional Approach)

Pros:

- Attempts to identify any AI-generated content

- Works retroactively on existing content

- No need for watermarking at generation time

Cons:

- High false positive rates

- Unreliable for serious applications

- Easily fooled by simple modifications

- Performance degrades as AI models improve

The evidence suggests watermarking will become the dominant approach, with detection models serving as backup for unwatermarked content.

2026 Outlook: The Future of AI Content Authentication

Looking ahead to 2026 and beyond, several trends will likely shape the watermarking landscape:

Regulatory Acceleration: EU AI Act enforcement and similar regulations worldwide will push more companies toward watermarking adoption, potentially making SynthID or C2PA-compatible solutions mandatory for certain applications.

Industry Convergence: While SynthID currently leads in deployment scale, the future may belong to hybrid solutions that combine Google’s invisible watermarking with C2PA’s metadata approach for comprehensive content provenance.

Technical Arms Race: As watermarking becomes more widespread, bypass techniques will become more sophisticated, driving continuous improvement in robustness and detection methods.

Platform Integration: Major social media platforms and content management systems will likely integrate watermark detection as a standard feature, making authenticity verification seamless for end users.

The question isn’t whether SynthID will remain dominant, but whether the industry can achieve the coordination necessary to make any watermarking standard truly effective against determined bad actors.


Key Takeaways

- SynthID embeds invisible watermarks directly into Google’s AI-generated content during creation, giving a keyed signal of origin rather than style-based guessing

- Scale and open-source strategy matter: Google has watermarked 10+ billion pieces of content and made text watermarking open-source to drive industry adoption

- Significant limitations remain: only works on Google’s AI models, struggles with factual text, and can be bypassed through intensive editing or adversarial methods

- Regulatory compliance advantage: SynthID positions users ahead of EU AI Act and U.S. state requirements for AI content disclosure

Conclusion: The Future of Digital Content Authenticity

SynthID watermarking represents the first major step toward industry-wide content provenance standards, but it won’t solve AI misinformation alone.

The future lies in hybrid solutions combining watermarking with metadata provenance, model disclosure policies, and real-time detection systems.

Google’s open-source strategy suggests the industry is converging toward collaborative standards rather than fragmented proprietary solutions.

For content creators and platform managers, this means watermarking will become essential infrastructure—not optional technology.

The question isn’t whether watermarking will become standard—it’s whether you’ll be ready when it does.

FAQs:

Q1: How does Google’s SynthID watermark work without affecting content quality?

A1: SynthID embeds watermarks at the generation level by subtly adjusting probability distributions for word choices in text, or pixel/audio values in media. These changes are statistically detectable but imperceptible to human users, maintaining full content quality.

Q2: Can SynthID detect AI content from ChatGPT, Claude, or other non-Google models?

A2: No. SynthID only works on content generated by Google’s AI models (Gemini, Imagen, Veo, Lyria) or select partners. It won’t detect content from OpenAI, Anthropic, or other AI providers, creating significant coverage gaps.

Q3: Is the open-source SynthID watermarking available for commercial use?

A3: Yes. Google open-sourced the text watermarking component in 2024 as part of its Responsible Generative AI Toolkit. Developers can integrate it into their own AI systems, though Google’s full implementation remains integrated with its own AI services.

Q4: How reliable is SynthID against sophisticated bypass attempts?

A4: Early testing shows 90-95% detection accuracy for unedited content, dropping to under 65% with heavy paraphrasing or translation. Determined actors can strip watermarks through adversarial methods, intensive editing, or content transformation, limiting effectiveness against sophisticated attacks.

Q5: Will other AI companies adopt SynthID-compatible watermarking?

A5: Google is aligning SynthID with C2PA industry standards and made the technology open-source to encourage adoption. However, competitors like OpenAI and Anthropic haven’t committed to compatible watermarking, though regulatory pressure may accelerate industry coordination.


Disclaimer

We share news and updates from official sources and trusted websites. Sometimes details change after publishing. Some products or services we write about might be paid or need subscription. Please check info from the official website before buying or investing. We do not have any conflict of interest with any company mentioned. We are not responsible for any decision you take based on our article. Always do your own research. All names, logos and brands belong to their owners.